Helicone

2024-08-22

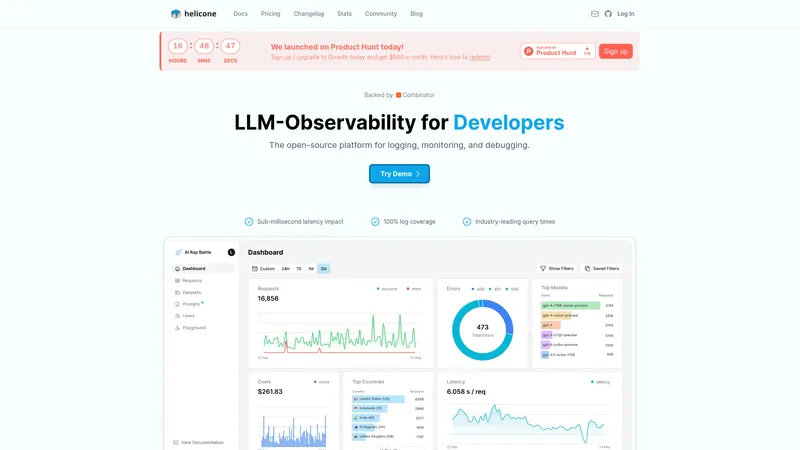

Helicone is an open-source platform for logging, monitoring, and debugging interactions with large language models. It offers instant analytics, risk-free experiments, and cloud integrations to help developers optimize their AI workflows.

Categories

AI Code Assistant, AI Developer Tools

Users of this tool

AI Developers, Data Scientists, Research Institutions, Startups integrating LLMs, Enterprise organizations

Pricing

Starter Plan, Growth Plan (includes $500 in credits), Enterprise Plan (for larger integrations)

Helicone Introduction

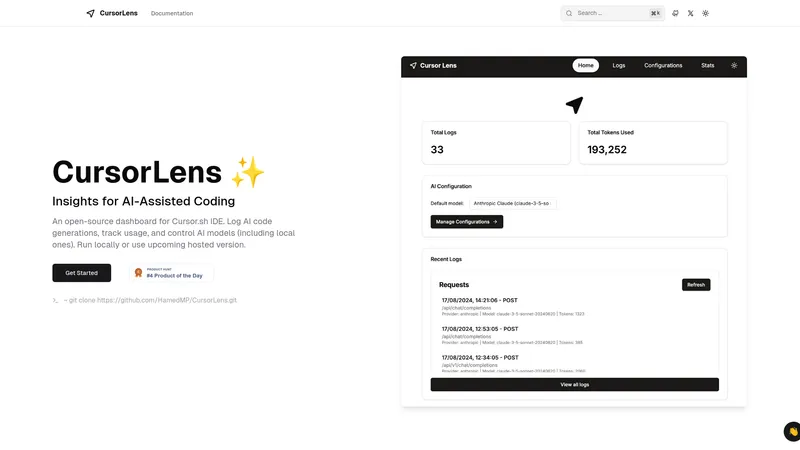

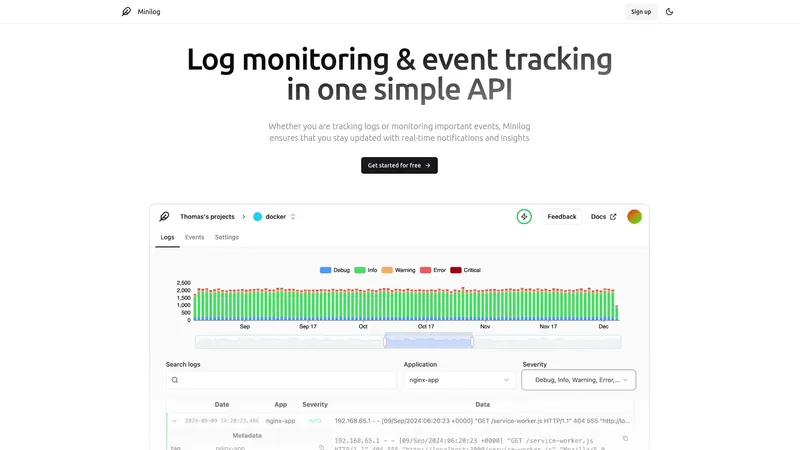

Helicone is an open-source platform for logging, monitoring, and debugging requests made to large language models (LLMs). It gives developers visibility into their LLM interactions so they can analyze performance and usage. Because it runs on Cloudflare Workers, Helicone adds only sub-millisecond latency to each request while giving users immediate access to metrics such as latency, cost, and user engagement.

The platform emphasizes transparency and community involvement, and it integrates with LLM providers including OpenAI, Anthropic, and Azure, among others. Features such as prompt management, instant analytics, and risk-free experimentation help developers optimize their AI workflows. An active community on Discord and GitHub supports collaboration around the project.

Helicone is built to scale with its users, from startups to established enterprises, and aims to address the challenges of deploying AI in production without compromising performance or usability.

Helicone Key Features

- Sub-millisecond latency impact

- 100% log coverage

- Instant analytics

- Prompt management

- Risk-free experimentation

- Custom properties for requests

- Caching to save costs

- User metrics for engagement

- Feedback collection for LLM responses

- Gateway fallback mechanisms

- Secure API key management
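Several of the features above (custom properties, caching, user metrics) are typically switched on per request rather than in code. The sketch below shows one way this could look, assuming Helicone's proxy-style integration in which requests go through a Helicone endpoint and carry extra HTTP headers. The endpoint URL and the header names (`Helicone-Auth`, `Helicone-User-Id`, `Helicone-Property-*`, `Helicone-Cache-Enabled`) are assumptions not stated in this listing and should be checked against Helicone's current documentation; `build_helicone_headers` is a hypothetical helper, not part of any SDK.

```python
from typing import Dict, Optional

# Assumed proxy endpoint for OpenAI traffic; verify against Helicone's docs.
HELICONE_OPENAI_BASE_URL = "https://oai.helicone.ai/v1"


def build_helicone_headers(
    helicone_api_key: str,
    user_id: Optional[str] = None,
    properties: Optional[Dict[str, str]] = None,
    enable_cache: bool = False,
) -> Dict[str, str]:
    """Build the extra HTTP headers for a request routed through Helicone.

    Hypothetical helper: header names are assumptions based on Helicone's
    header-driven integration and may differ in current releases.
    """
    # Authenticates the request with Helicone (separate from the provider key).
    headers = {"Helicone-Auth": f"Bearer {helicone_api_key}"}

    # Attributes the request to an end user for per-user metrics.
    if user_id is not None:
        headers["Helicone-User-Id"] = user_id

    # Custom properties become one header each, e.g. Helicone-Property-Feature.
    for name, value in (properties or {}).items():
        headers[f"Helicone-Property-{name}"] = str(value)

    # Opts this request in to response caching.
    if enable_cache:
        headers["Helicone-Cache-Enabled"] = "true"

    return headers
```

With the OpenAI Python SDK, these headers could then be passed when constructing the client, for example `OpenAI(base_url=HELICONE_OPENAI_BASE_URL, default_headers=build_helicone_headers(...))`, so that existing calls are logged without further code changes.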

Helicone Use Cases

- An AI developer integrates Helicone with OpenAI to monitor real-time performance and log requests for debugging, improving the accuracy of their AI responses.

- A data scientist uses the prompt management feature to test various prompts and analyze user interactions with the model, leveraging instant analytics to optimize their usage.

- An enterprise organization implements Helicone to manage millions of logs generated from its AI applications, using the platform to streamline operational efficiency and maintain uptime.

- Research institutions use Helicone for risk-free experimentation, evaluating different prompts while production data remains unaffected.

- Startups integrating LLMs use Helicone's community support to deploy on-prem solutions, benefitting from contributed features to maintain compliance and security.