Langtrace AI

2024-08-07

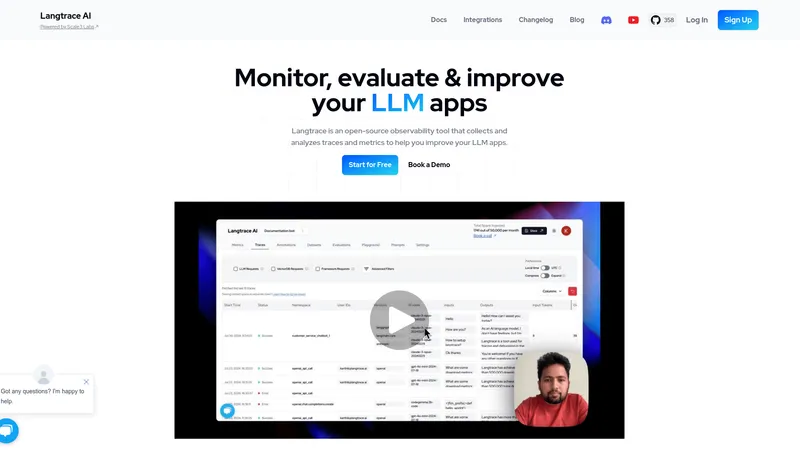

Langtrace AI helps you monitor and improve Large Language Model (LLM) applications. Get insights, trace requests, and improve performance with this open-source observability tool, built with security in mind. Start for free today!

Categories

- AI Development Tools

- AI App Builder

Users of This Tool

- Machine Learning Engineers

- Data Scientists

- DevOps Professionals

- AI Researchers

- Product Managers

Pricing

- Free tier with basic features

- Paid subscriptions for advanced features and enterprise support

Langtrace AI Introduction

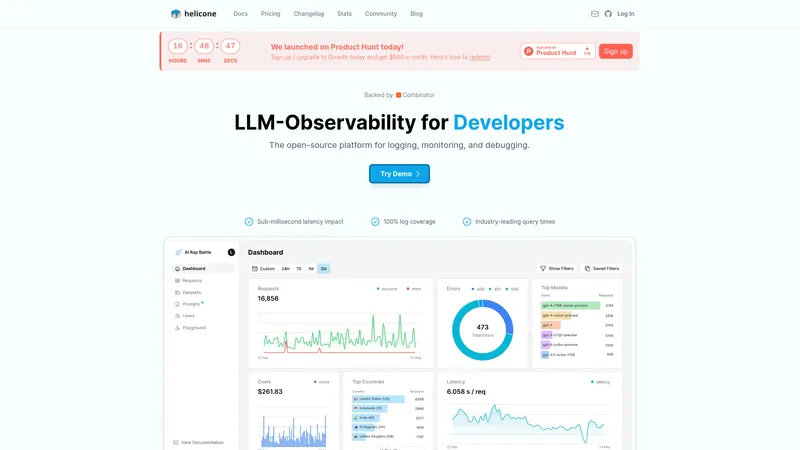

Langtrace AI is an open-source observability tool for developing and operating Large Language Model (LLM) applications. It lets developers collect, analyze, and act on the metrics and traces their LLM applications produce. The platform holds SOC 2 Type II certification, which reflects its focus on protecting user data alongside observability.

Langtrace integrates with popular LLM providers and frameworks, including OpenAI and Google’s Gemini, through a simple SDK setup. Its features, such as request tracing, bottleneck detection, and performance evaluation, help ML engineers develop, deploy, and optimize LLM applications with a clear view of how they behave. Because it supports building golden datasets and continuous feedback loops, developers can iterate on their applications more efficiently and check whether each change actually improves quality.

The Langtrace community is active on Discord and GitHub, where developers share insights and ask for help. Rather than offering analytics alone, Langtrace aims to provide visibility into every stage of the ML pipeline behind an LLM application.
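To make "trace requests and detect bottlenecks" concrete, here is a minimal, standard-library-only sketch of the underlying idea. Each step of a request is wrapped in a timed span, and the slowest span is reported as the bottleneck. The `Tracer` and `Span` classes are hypothetical illustrations and are not the Langtrace SDK API. In practice, the SDK setup instruments supported providers so you do not write this bookkeeping yourself.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Callable, Iterator, List


@dataclass
class Span:
    """One timed step of a request, such as a retrieval or an LLM call (hypothetical)."""
    name: str
    start: float
    end: float = 0.0

    @property
    def duration(self) -> float:
        return self.end - self.start


@dataclass
class Tracer:
    # Injectable clock so timings can be made deterministic in tests.
    clock: Callable[[], float] = time.perf_counter
    spans: List[Span] = field(default_factory=list)

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        """Time the enclosed block and record it as a span."""
        s = Span(name=name, start=self.clock())
        try:
            yield s
        finally:
            # Record the end time even if the traced step raises.
            s.end = self.clock()
            self.spans.append(s)

    def bottleneck(self) -> Span:
        """Return the recorded span with the longest duration."""
        return max(self.spans, key=lambda s: s.duration)


# Usage: two sequential steps of one request; the sleeps stand in for real work.
tracer = Tracer()
with tracer.span("retrieve_context"):
    time.sleep(0.01)
with tracer.span("llm_call"):
    time.sleep(0.05)
slowest = tracer.bottleneck()
print(f"bottleneck: {slowest.name} ({slowest.duration * 1000:.1f} ms)")
```

The sketch times only sequential, non-overlapping spans. With nested spans, an enclosing span would always look slowest, so a real tracer also records parent/child relationships.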

Langtrace AI Key Features

- End-to-end observability of ML pipelines

- Request tracing and bottleneck detection

- Automated evaluations based on LLM interactions

- Annotation of LLM requests to create golden datasets

- Metrics tracking for costs and latency
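The annotation and evaluation features fit together as follows. Reviewed requests become a golden dataset, and new model or prompt versions are scored against it. The sketch below is a hypothetical, stdlib-only illustration: the `LLMRequest` fields (`expected`, `approved`) and both functions are assumptions, not Langtrace's data model. Exact-match scoring is a deliberately simple stand-in for whatever evaluation metrics Langtrace actually applies.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class LLMRequest:
    prompt: str
    output: str
    # Hypothetical annotation fields filled in by a human reviewer.
    expected: Optional[str] = None
    approved: bool = False


def build_golden_dataset(requests: List[LLMRequest]) -> List[Tuple[str, str]]:
    """Keep only reviewed requests, pairing each prompt with its approved answer."""
    return [
        (r.prompt, r.expected)
        for r in requests
        if r.approved and r.expected is not None
    ]


def exact_match_score(
    golden: List[Tuple[str, str]], generate: Callable[[str], str]
) -> float:
    """Fraction of golden prompts for which `generate` returns the expected answer."""
    if not golden:
        return 0.0
    hits = sum(generate(prompt).strip() == expected.strip() for prompt, expected in golden)
    return hits / len(golden)


# Usage: annotate logged requests, then score the logged outputs against them.
log = [
    LLMRequest("Capital of France?", "Paris", expected="Paris", approved=True),
    LLMRequest("2 + 2?", "5", expected="4", approved=True),
    LLMRequest("Say hi", "hello"),  # not reviewed, so excluded
]
golden = build_golden_dataset(log)
logged_outputs = {r.prompt: r.output for r in log}
print(exact_match_score(golden, lambda p: logged_outputs[p]))  # 0.5
```

Re-running `exact_match_score` with a new model version in place of `logged_outputs` gives the feedback loop described above: the golden dataset stays fixed while the system under test changes.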

Langtrace AI Use Cases

- A machine learning engineer uses Langtrace to trace the performance of their deployed LLM application, identifying bottlenecks and optimizing processing speed.

- Data scientists create golden datasets by annotating and evaluating LLM requests over time, then use them to improve model accuracy and reliability.

- DevOps professionals use Langtrace to monitor the health of LLM applications in real time, minimizing downtime and service interruptions.

- AI researchers use the performance comparison feature in the Langtrace playground to evaluate how different prompts affect outputs across different LLMs.

- Product managers track costs and latency metrics on a per-user level to optimize budgeting and resource allocation for their AI services.
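Per-user cost and latency tracking comes down to grouping request records by user and aggregating them. The minimal sketch below shows that aggregation under stated assumptions. The `RequestRecord` fields, the `model-a` name, and the prices in `PRICE_PER_1K` are all hypothetical placeholders, not real provider prices or Langtrace's schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median
from typing import Dict, Iterable, List

# Hypothetical prices in USD per 1,000 tokens; real prices depend on provider and model.
PRICE_PER_1K = {"model-a": {"input": 0.0005, "output": 0.0015}}


@dataclass
class RequestRecord:
    user_id: str
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def request_cost(r: RequestRecord) -> float:
    """Cost of one request from its token counts and the model's price table."""
    price = PRICE_PER_1K[r.model]
    return (r.input_tokens * price["input"] + r.output_tokens * price["output"]) / 1000


def per_user_summary(records: Iterable[RequestRecord]) -> Dict[str, dict]:
    """Group requests by user; report request count, total cost, and median latency."""
    by_user: Dict[str, List[RequestRecord]] = defaultdict(list)
    for r in records:
        by_user[r.user_id].append(r)
    return {
        user: {
            "requests": len(rs),
            "total_cost_usd": sum(request_cost(r) for r in rs),
            "median_latency_ms": median(r.latency_ms for r in rs),
        }
        for user, rs in by_user.items()
    }


records = [
    RequestRecord("alice", "model-a", 1000, 500, 120.0),
    RequestRecord("alice", "model-a", 2000, 1000, 300.0),
    RequestRecord("bob", "model-a", 500, 200, 90.0),
]
for user, stats in per_user_summary(records).items():
    print(user, stats)
```

The median is used instead of the mean so that a single slow request does not dominate a user's latency figure. A production dashboard would typically also report tail percentiles.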